The Forbidden Byte: In the Garden of Silicon, is Apple Tempted by Google’s Gemini?

This week in AI: Apple might use Google Gemini, Figure AI's humanoid can hear, see, and speak, Nvidia makes big announcements at its GTC conference, xAI open-sources Grok-1, and the EU passes the world's first AI law.

Watch the video debrief if you don't have time to read!

Advancement #1: Apple in Conversations to use Google Gemini in Upcoming iPhones

On Monday, Bloomberg reported that Apple is in conversations to license Google’s Gemini model to power a range of cloud-based (off device) AI features in future iPhones. Apple has also reportedly had similar discussions with OpenAI.

Apple's AI/ML researchers released a research paper this month on MM1, a family of multimodal models that, according to the paper, can understand text and images together and perform advanced reasoning. Even so, Apple's ongoing talks with Google strongly suggest that Apple is not as far along in its Generative AI efforts as many had hoped.

The terms of the agreement have not been finalized and are unlikely to be announced before Apple's annual WWDC (Worldwide Developers Conference) in June.

My Initial Thoughts: Apple has been suspiciously quiet about Generative AI over the last year, but that government-level secrecy is expected from Apple. Given the launch of its open-source multimodal LLM Ferret, as well as Tim Cook's comments to shareholders last month that Apple will "break new ground in generative AI" this year, I thought they would've been further along.

While I understand Apple's preference for Google over a Microsoft/OpenAI package (Microsoft being Apple's historical public enemy #1), the only part of Gemini that currently meets Apple's privacy bar is Gemini Nano, because it can run locally on the device. As Apple's Head of AI stated a few years ago, running models any other way is "technically wrong".

Since the reports say Gemini would power cloud-based features, maybe Apple has changed its mind. A deal like this would also raise the question of what differentiates an iPhone from an Android phone if both run the same AI technology.

Overall, this doesn't make sense to me. If a deal like this goes through, it's a clear indicator that Apple missed the mark and may be entering a new era. AI is arguably one of the most important innovations of our lifetimes, and if Apple publicly begins licensing a competitor's AI, I don't believe the company will ever recover.

Advancement #2: Figure AI's Humanoid Robot Can Hear, See, and Speak Like a Human

A few weeks ago, I reported that Figure AI had announced a partnership with OpenAI. This week, we got a glimpse of what that collaboration looks like: Figure AI released an impressive demo video showing its humanoid robot seeing, hearing, and speaking like a human.

Figure 01 displayed amazing capabilities, including:

Recognizing objects

Understanding its surroundings

Stating the reasoning behind the tasks it performed

Speaking conversationally, even adding human filler words such as "um"

Moving and speaking with significant fluidity

The Figure 01 robot is fully electric, 5’6” tall, weighs 60 kilograms with a 20 kg payload, and runs for 5 hours per charge.

My Initial Thoughts: I vote that every humanoid robot demo should be in a messy kitchen with kids running around.

I think AGI is a lot closer than many people expect, and we're currently lucky that Figure AI has not yet mastered the manufacturing side. However, as they expand their operations over the next few years, we're drawing closer to the dystopian future we've been warned about throughout the 21st century. It's evident that they have a highly skilled team of engineers, an exceptional leader, and a clear vision. I simply worry that we are not acting responsibly by preparing adequately for the rollout of such technologies.

Advancement #3: Nvidia Takes 2 Major Steps Forward in the AI Race at Its GTC Conference

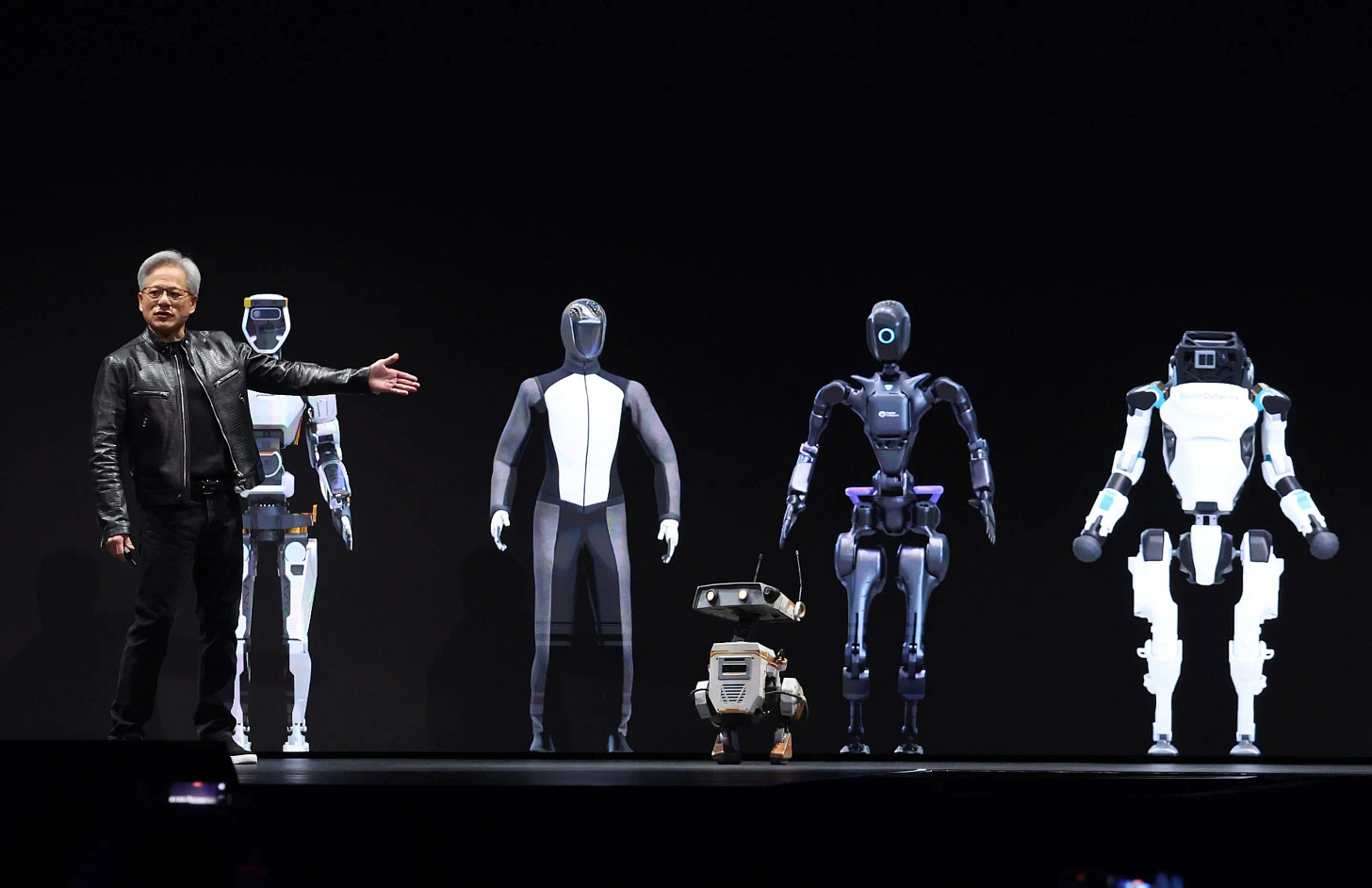

Nvidia's founder and CEO, Jensen Huang, unveiled remarkable breakthroughs in the company's GTC conference keynote.

Nvidia announced expanded partnerships (including with Mercedes-Benz) and launched Omniverse for the Apple Vision Pro, alongside two revolutionary innovations that significantly advance AI.

Nvidia’s Humanoid Robots

Nvidia introduced "Project GR00T," a foundational model for humanoid robots, which Huang heralded as the "next wave" in AI and robotics.

In an amazing live demo featuring Project GR00T robots, Huang explained that robots powered by GR00T (short for Generalist Robot 00 Technology) are designed to learn movements by watching humans and to understand natural language. These robots run on Nvidia's new Jetson Thor robotics chips.

Blackwell B200 Chip

Nvidia unveiled the Blackwell chip and platform, billed as the "world's most powerful chip" with 208 billion transistors, and said it will usher in "a new era of computing." According to Nvidia, Blackwell surpasses its predecessor, Hopper (which currently powers the major LLMs), in significant ways:

It can run a trillion-parameter generative AI model at up to 25x lower cost and energy consumption;

It speeds up AI training by 2.5x;

It speeds up inference (a trained model producing outputs from new inputs) by 5x.

Nvidia has also built infrastructure that links multiple GPUs together (like LEGO bricks), letting organizations combine many separate GPUs so they work as a single, massive GPU (or "superchip"). The Blackwell computing platform and the Blackwell B200 chip are set to give AI developers far more compute for training AI models on multimodal data.

Major companies such as Google, Meta, Microsoft, OpenAI, and Tesla are eager to adopt Blackwell quickly. Nvidia dominates this market, with an estimated 90% share of the chips used for Large Language Models (LLMs).

My Initial Thoughts: To me, this was the biggest advancement of the week, even if it didn't make this article's headline. Nvidia's announcements were a monumental step forward for AI.

To train and deploy AI models at the scale needed to get the results we want, we need far more GPU compute than we've had. Building a more powerful chip is a natural evolution, but what makes this launch so incredible is that it achieves "Jensen's Law": a thousandfold increase in AI compute every 8 years. That's remarkable when you think about what it takes to get AI to the next level.
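To put "a thousandfold increase every 8 years" in perspective, here is a quick back-of-the-envelope calculation. It assumes the growth compounds smoothly year over year (in reality it arrives in jumps with each chip generation), and the comparison to a Moore's-Law-style doubling every two years is my own rule-of-thumb baseline, not a figure Nvidia gave.

```python
import math

FACTOR = 1_000  # thousandfold increase in AI compute
YEARS = 8       # over this many years

# Constant yearly multiplier that compounds to FACTOR over YEARS.
annual_growth = FACTOR ** (1 / YEARS)

# How long it takes compute to double at that rate.
doubling_time_months = 12 * YEARS / math.log2(FACTOR)

# Baseline for comparison: doubling every ~2 years over the same period.
moores_law_factor = 2 ** (YEARS / 2)

print(f"Implied growth per year: {annual_growth:.2f}x")
print(f"Implied doubling time: {doubling_time_months:.1f} months")
print(f"Doubling every 2 years for {YEARS} years gives only {moores_law_factor:.0f}x")
```

Under these assumptions, compute grows roughly 2.4x per year and doubles about every 9.6 months, compared with 16x over the same 8 years for a two-year doubling cadence.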

As for Project GR00T, I was stunned into silence when I watched the live demo. The way the robots reacted to voice commands, and the way they moved, truly felt like something out of a movie (they even brought out two robots that moved like Disney robots such as WALL-E). I can honestly say no other humanoid demo had ever made me feel that way.

Lastly, a big takeaway from this keynote is that Nvidia is no longer just a chip-making company; it is also becoming a software company. In the long term (6-8+ years from now), software could well become a larger part of the business than chip making. Companies that can build software on these new ecosystems and infrastructure are the ones that will actually bring the AI dream to life.

Advancement #4: Elon Musk’s Open Sourced LLM Grok-1 Officially Released

Grok-1 is now officially available on GitHub under the Apache 2.0 license, meaning anyone is free to modify and redistribute it. At 314 billion parameters, Grok-1 is the largest open-source model currently available.

Grok-1 is a MoE (Mixture of Experts) model that was trained from scratch by xAI using Rust, JAX and a custom training stack. In addition, the base model is not fine-tuned for any particular task. The code of conduct in the documentation accompanying Grok’s release says only “Be excellent to each other”, an apparent reference to the 1989 sci-fi comedy Bill & Ted’s Excellent Adventure.

My Initial Thoughts: If you're not familiar with MoE or how it differs from the dense neural network architecture used in most LLMs, here's the short version: an MoE model is a collection of smaller "expert" networks, and a router activates only the experts a given input needs instead of the whole network. It's much more efficient and, in my view, definitely the future (Google's Gemini 1.5 is an MoE model as well). I covered it in a bit more detail in a past blog post, which you can find here.
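For the curious, here is a toy sketch of the routing idea described above, written in plain Python. It is not Grok-1's code: the sizes (8 experts, 2 active per input, 4-dimensional vectors) are arbitrary illustrative choices, and each "expert" is just a random linear map, whereas real experts are trained feed-forward networks. The point is the mechanism: a router scores every expert, only the top-k experts are evaluated, and their outputs are blended using softmax weights over the chosen scores.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, x)) for row in matrix]


class ToyMoE:
    """Illustrative top-k mixture-of-experts layer (not a real model)."""

    def __init__(self, dim, num_experts=8, top_k=2, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one weight vector per expert, used to score the input.
        self.router = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
        # Experts: each is a random dim x dim linear map in this toy version.
        self.experts = [
            [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(dim)]
            for _ in range(num_experts)
        ]

    def forward(self, x):
        # Score every expert, but only evaluate the top_k highest-scoring ones.
        scores = matvec(self.router, x)
        chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[: self.top_k]
        weights = softmax([scores[i] for i in chosen])
        out = [0.0] * len(x)
        for w, i in zip(weights, chosen):
            y = matvec(self.experts[i], x)
            out = [o + w * yi for o, yi in zip(out, y)]
        return out, chosen


moe = ToyMoE(dim=4)
out, used = moe.forward([0.5, -1.0, 0.25, 2.0])
print(f"Experts used: {used} out of {len(moe.experts)}")
```

Only 2 of the 8 experts do any work for this input, which is where the efficiency gain comes from: the model can hold many parameters while spending compute on a fraction of them per token.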

I still wonder why Musk is doing this. Is he truly dedicated to open-sourcing AI technology?

Advancement #5: The World’s First Law on Artificial Intelligence Passed in the EU

The EU's AI Act passed last week, marking a significant milestone in the regulation of artificial intelligence worldwide. Expected to go into effect as early as May, it introduces the first legal framework aimed at safeguarding fundamental rights, promoting transparency, and increasing accountability in AI use.

Key points include:

Categorization of AI Systems: AI systems are classified by risk level (unacceptable, high, limited, and minimal), with specific rules tailored to each category. The most advanced general-purpose AI models face stringent requirements, including risk assessments, cybersecurity measures, and incident reporting. Non-compliance could result in fines or bans in the EU.

Restrictions and Bans: The Act bans outright practices deemed to pose an unacceptable risk to fundamental rights, such as untargeted scraping of facial images and emotion recognition in workplaces and schools.

Transparency Requirements: Companies must disclose when AI is being used and label AI-generated content.

Obligations for AI Companies: Model developers like OpenAI must document their development process, explain how they respect copyright law, and summarize the training data used. Free, open-source AI models are exempt from some of these requirements.

However, the AI Act has faced significant criticism. Opponents argue that it leaves loopholes for government surveillance and for excessive use of sensitive biometric data by law enforcement, to the point that the Act might serve more as a guide for implementing biometric surveillance than as a way to prevent it. Critics also warn that industry lobbying and exemptions could weaken its intended prohibitions. Amid this feedback, the U.N. General Assembly adopted its first resolution on artificial intelligence this week (initiated by the U.S.), aiming for global AI standards that ensure safety, security, and trustworthiness.

This legislative effort by the EU, coupled with the UN's resolution on AI, reflects a growing global consensus on the need for regulatory frameworks that harness the benefits of AI while mitigating its risks.

My Initial Thoughts: The EU's Artificial Intelligence Act leaves me skeptical, mainly because of its broad scope and potential loopholes that may not enforce much change, especially around biometrics. As someone who avoids biometric scans at all costs for privacy reasons, I see the legislation's allowance for biometric data use by law enforcement as opening the door to more mass surveillance. The potential for industry lobbying to get around the stringent requirements also raises doubts about the Act's effectiveness. While its goals are commendable, its implementation could fall short, allowing the very practices it seeks to regulate to continue and failing to fully safeguard citizens' rights and privacy as intended.

I hope I am wrong, but I will be pleasantly surprised if EU citizens see ANY useful downstream effects from this legislation.

PLEASE REMEMBER TO SUBSCRIBE TO MY YOUTUBE CHANNEL, PLEASE :-) SEE YOU ALL NEXT WEEK!