OpenAI's Data Breach and the USA's Risk of Chinese AI Attacks

Plus, OpenAI bans its models from the Chinese market, China's attack on Microsoft's security, NATO updates its AI strategy, and two new AI apps worth knowing about.

This week in AI, OpenAI isn’t so open after all: we learned about a security breach from a year ago that was never reported. But what we’re really going to focus on is the risk of security breaches at AI companies because of China, since most of the main updates this week, weirdly, touched on China a lot.

Watch the video below.

We’ll also briefly talk about NATO’s revised AI strategy, and my two favorite AI apps that were launched (AWS App Studio and Anthropic’s Prompt Playground).

Let’s get into it.

#1 OpenAI’s and Microsoft’s 2023 Data Breaches, and Why China Is in the Discussion

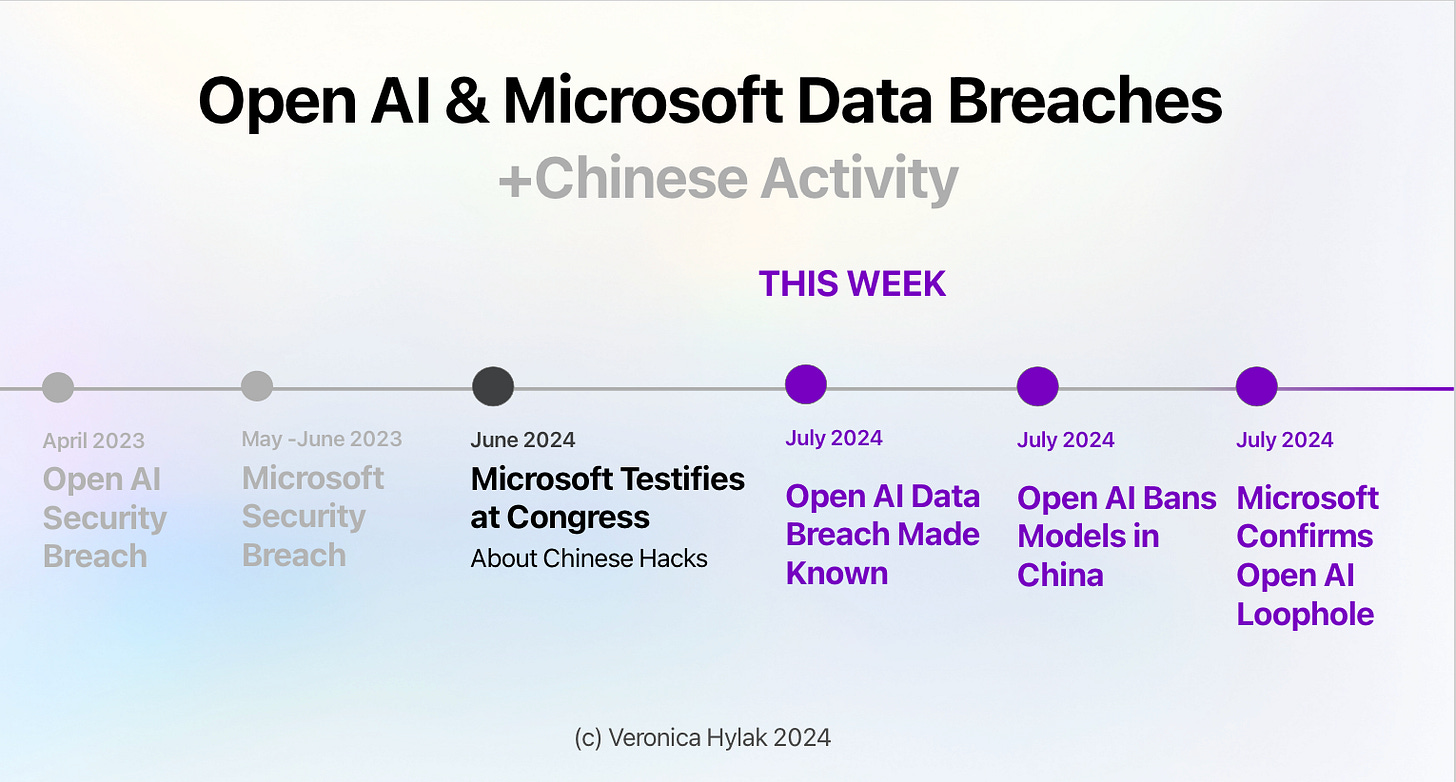

This week, anonymous insiders revealed to the New York Times that OpenAI had a data breach in April 2023, but the company kept it secret because, allegedly, no customer or partner information was stolen and its Microsoft-backed AI infrastructure was not penetrated.

OpenAI executives didn't see it as a national security threat since they believed the hacker was just a private individual with no ties to a foreign government, and therefore decided not to inform the FBI or other law enforcement. Some OpenAI employees didn’t agree, and were worried about the increased risk of foreign adversaries, like China, stealing AI technology and endangering U.S. security.

Concerns about Chinese hackers aren't unfounded. A few weeks ago, Microsoft's president testified on Capitol Hill that a hacker group linked to the Chinese government used Microsoft's infrastructure to attack federal government networks only a month after OpenAI’s breach. The group hacked Microsoft’s email systems in May and June 2023, stole over 60,000 emails from the State Department’s network, and accessed the accounts of 22 organizations and over 500 people (including Commerce Secretary Gina M. Raimondo and the U.S. ambassador to China, Nicholas Burns).

Now, here's how it all comes full circle.

OpenAI officially left the Chinese market this week, and although businesses in China are now scrambling for alternatives, they might not need to worry. Microsoft also confirmed this week that there's a loophole that allows Chinese entities access to OpenAI’s models through its Azure cloud service.

Here’s the 30-second wrap-up of what I just covered.

This week it was confirmed that OpenAI had a data breach in April 2023, which they didn't report to the FBI because executives thought the hacker acted alone and didn't access their Microsoft-backed models.

However, just a month later, Microsoft faced a major Chinese espionage attack on U.S. government entities, in which the attackers accessed confidential and possibly encrypted emails.

Despite the growing concern about security breaches like both of these, and about China's role in them, Microsoft confirmed they will use a loophole to continue offering OpenAI services to Chinese entities…

Even though OpenAI officially left the Chinese market on July 9.

My Initial Thoughts: I was completely lost trying to figure out this week's news at first, because China was mentioned far more than normal in a lot of different places, and the stories didn’t seem connected at all. Then it clicked for me. It seems strange that news outlets are not making connections between all of these China-related reports this week.

This is all crazy to me.

OpenAI absolutely should’ve reported it to the FBI. I didn’t realize boardroom executives were able to conduct those sorts of government investigations. And can we even believe that only internal communication apps were compromised? Can we actually believe that our data wasn’t?

China is catching up fast, building AI that’s nearly as powerful as the leading U.S. systems, and it has half the world’s leading AI researchers. So this threat is absolutely real, it’s already happened, and I personally believe these two breaches could well be related.

#2 NATO’S Revised AI Strategy

On July 10, NATO unveiled its revised AI strategy that aims at accelerating the implementation of AI within the alliance in a safe and responsible way.

This updated plan builds on the 2021 strategy (launched pre-ChatGPT), incorporating recent advances like generative AI. While the full strategy plan was not released, a statement highlighted enhancing interoperability between AI systems across member nations.

A significant addition to this strategy is the recognition of AI-enabled disinformation within political climates, but despite the risks, NATO states it’s critical they leverage GenAI to maintain a strategic edge.

Inspired by ongoing AI military efforts in the U.S., including the Defense Department's Task Force Lima, the Intelligence Community's offline LLM that can be queried like ChatGPT, and the Air Force's NIPRGPT tool, NATO is committed to adopting these technologies swiftly to ensure it remains at the forefront of AI advancements.

My Initial Thoughts: It's not surprising that NATO has updated its AI strategy, given the longstanding military use of AI (I was personally working with AI with the DoD and Army in 2019, and they’ve used it way longer than that). What is concerning is the emphasis on "interoperability" among AI systems across NATO states. It suggests a move towards potentially integrating AI capabilities into a unified intelligence network among member nations. While this could enhance coordination and efficiency, it raises questions about data security, sovereignty, and the broader implications for international relations in the AI era.

#3 My Two Favorite AI Application Updates This Week

AWS App Studio: Building Apps with Generative AI

At the AWS Summit in New York, AWS announced App Studio, which allegedly allows non-developers to build full software applications with prompts in minutes instead of hiring an entire development team. The example they gave: if you need an app to track inventory, you can describe your needs to App Studio, and it will generate the app for you with a user interface, data model, and routing system. Plus, it creates mock data so you can see how it works without worrying about the underlying code. According to AWS, deployment, operations, and maintenance are all handled by App Studio, so that your application is secure and scalable.

Anthropic’s Claude: Prompt Playground for Better AI Apps

Anthropic’s Claude has introduced a new feature called the Prompt Playground to help improve AI applications and their output more quickly. Language models can be really tricky: there’s no known methodology for optimizing the output, and small changes in how you word a prompt can lead to huge differences in results. So instead of wasting significant time fighting that battle, you can use this new feature to get quick feedback that helps you optimize your prompts. It is located under the new Evaluate tab in Anthropic Console (the testing workspace for developers), and it makes it easier to find and implement prompt improvements.

My Initial Thoughts: Not much to say about Anthropic’s Prompt Playground; it's just super helpful. As for AWS App Studio, it sounds amazing, but having been in software all these years, and knowing the output quality of GenAI code generators, I don’t see it working very well.

Maybe it works for a single-page app, but the maintenance of software systems is a completely different cost, and hosting can add a lot of money. And what if you want to change something about the app that was created? I see this as a great step one, but nothing that is truly viable yet.

PS: AI Data Privacy Seminar August 8. Register here, and hope to see you there!