Meta AI Assistant and the Severe Risk to Your Data Privacy

This week in AI: the Llama 3 launch and Meta AI Assistant, Boston Dynamics' new fully electric Atlas humanoid, Google's Prompting 101 guide, and Microsoft's VASA-1.

Don’t have time to read? Watch the debrief.

Advancement #1: Llama 3 Launch, Meta AI Assistant (ChatGPT Competitor), and the Risk to Your Data Privacy

Four days ago, Meta launched Llama 3 in two sizes: 8B and 70B parameters. The launch models are text-only, with Meta saying multimodal versions will follow. Meta's own benchmarks pit the 70B model against Gemini Pro 1.5 and Claude 3 Sonnet and show it outperforming both on most of them, with reportedly better handling of language nuance, translation, and multi-step tasks. Like its predecessor, it can generate code; the image generation comes from the Meta AI assistant built on top of it.

The most remarkable aspect of this launch, and a curiously overlooked one, is the debut of Meta's AI assistant. In addition to a public ChatGPT-style interface at meta.ai, the assistant has been woven into the apps many of us use every day, including Facebook, Instagram, WhatsApp, and others.

What does this mean? Meta has effectively launched a ChatGPT-style assistant inside all of its apps.

It enables users to search conversation histories, ask for answers or advice, and create images. The assistant can also curate app content, such as finding certain types of reels on Instagram, and offer users personalized recommendations. For example, want to find a dinner spot that you and your friends are going to like? Meta AI Assistant can help you without much context.

This launch shows that Meta is positioned, arguably ahead of any other company, to change how users interact with its apps by using individual user data to inform its AI. This isn't a traditional stateless model; it’s a deep contextual engine designed to draw on two decades' worth of user behavior and preferences to help you do almost everything.

Since Llama 3 is openly released and Meta's products process user data, Meta shipped Llama Guard 2 alongside it to address potential safety and ethical concerns. Llama Guard 2 is a safeguard model that classifies prompts and responses against a safety policy so that unsafe content can be filtered. Complementing this, CyberSec Eval 2 is an evaluation suite that tests how easily a model can be misused or exploited, for example through prompt injection or other attempts to get around model restrictions such as jailbreaking.

My Initial Thoughts: Llama 3's open-source approach, combined with the inherent jailbreaking risks, exposes our data to unprecedented threats. "Free" services come at the cost of our privacy, and consent now feels like a façade.

For instance, a few days ago I saw a tiny popup on Instagram stating that my continued use meant agreement with Meta AI's privacy terms, yet I never gave direct consent. I searched for days without finding a clear explanation of what I had passively agreed to, all while the Meta AI assistant had already integrated itself into my user experience without explicit approval. I did look to Meta’s general privacy policy for clues on how the data could be used (it was probably the most complicated data privacy policy I have ever read), and some of the things I found there were horrifying. The biggest one?

By using Meta products, we grant Meta a broad license to use and distribute our data, photos, posts, videos, and more.

“You grant us a non-exclusive, transferable, sub-licensable, royalty-free, and worldwide license to host, use, distribute, modify, run, copy, publicly perform or display, translate, and create derivative works of your content (consistent with your privacy and application settings). This means, for example, that if you share a photo on Facebook, you give us permission to store, copy, and share it with others (again, consistent with your settings) such as Meta Products or service providers that support those products and services. This license will end when your content is deleted from our systems.” (but there’s a catch… watch my YouTube video).

There were many other concerning pieces, such as their right to use the contents of your entire camera roll if you have enabled full access, the potential for model trainers to access your personal information, and their right to use your voiceprint, among others. This launch has raised my biggest data privacy concerns to date. Access to 'free' services could come at the expense of our data freedom, and Meta repeatedly emphasizes within its privacy policy that its services are free, which makes us feel like we agreed to this.

With many users now questioning the exchange of personal data for digital services, some are starting to deactivate their social apps. Without a clear option to opt out of Meta AI, I’m also considering whether the benefits are worth the privacy trade-off.

While Meta offers minimal data privacy safeguards, it does give users a measure of control: you can erase information shared during any AI-assisted chat on Messenger, Instagram, or WhatsApp. You just need to type “/reset-ai” in the chat to set the assistant back to its default state.

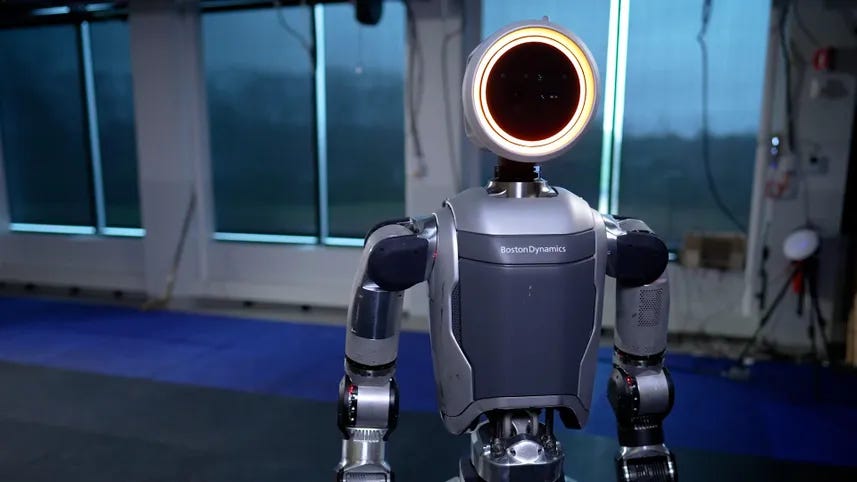

Advancement #2: Boston Dynamics Introduces All-Electric Version of Its Humanoid Robot Atlas

Boston Dynamics, a leading robotics company, has introduced a new all-electric version of "Atlas," their humanoid robot. This announcement, made from their Waltham headquarters, coincided with the retirement of the previous hydraulic model of Atlas. Currently in the research and development stage, this new model is anticipated to be stronger, more agile, and more dextrous, with an increased range of motion and more practical real-world applications. Hyundai Motor Group, which acquired Boston Dynamics in 2021, will be the first to test this advanced robot.

A demo video of the new electric version can be seen here.

My Initial Thoughts: Boston Dynamics is the OG company in the humanoid robot race. They’ve been at this for decades, so while many competitors (including Figure AI and even Nvidia) continue to pop up, Boston Dynamics has long been the company to aspire to. The movements of all of their robots seem to be light-years ahead of the competition, though they haven’t given any indication of whether the new Atlas can talk like the robots in other demos we’ve seen recently.

At the end of the day, a humanoid’s ability to execute tasks will determine who wins this race, and Boston Dynamics has stated it wants to spend more time on improving task execution, especially with the robot's extremities.

Advancement #3: Google Launches a Prompting 101 Guide

Google has released a "Prompting 101" guide for Google Workspace users. This guide is designed to help users master the basics of writing effective prompts to improve productivity and efficiency when using Gemini, the AI feature integrated into Google Workspace. The guide covers foundational skills tailored to various roles and use cases, emphasizing best practices. It helps users collaborate with AI in real time on familiar platforms like Gmail, Google Docs, Sheets, Meet, and Slides, supporting tasks such as writing, organizing, and visualizing data without compromising privacy or security.

Don’t think this will be helpful only for Google Workspace users. It’s a great guide for approaching prompting in any GenAI environment. The guide is free and can be accessed using this link.

My Initial Thoughts: This prompt guide is one of the first of its kind that I’ve seen, and it contains some genuinely helpful information for specific personas such as marketing and business decision-makers. Prompting is an art, and the core concepts they reference are ones I already teach in my Udemy course (so they’re not groundbreaking), but this is definitely an amazing resource for copying and pasting templates to write better prompts overall.

Advancement #4: Microsoft’s VASA-1

Microsoft Research Asia has developed VASA-1, an AI model that can generate synchronized animated videos of people talking or singing using just a single photo and an audio track. This technology has potential applications in creating virtual avatars for video conferencing or other real-time uses, as it can operate locally without the need for live video feeds. The model, which has been trained on the VoxCeleb2 dataset featuring over a million utterances from YouTube videos of 6,112 celebrities, is capable of producing high-resolution videos (512x512 pixels) at up to 40 frames per second with minimal latency.

This raises possibilities for its use in various communication platforms, as well as concerns about misuse for creating deceptive or misleading content. Examples were published on a Microsoft Research page.

My Initial Thoughts: We’ve seen a lot of these image-to-video generation tools recently, and I think this is one of the better ones, maybe on par with Synthesia and HeyGen. The biggest thing I personally noticed was the lack of natural eye movement, so if you’re trying to spot a deepfake, I’d look at the eyes first.

Housekeeping

I've got an amazing Udemy course with 3 hours of content, and you should check it out. :-)