12 Days of OpenAI Made Simple (SPECIAL ARTICLE)

An overview of everything that was launched during the 12 Days of OpenAI, categorized into red, yellow and green depending on the impact of the release.

From $200 subscriptions to AI Santa Claus, OpenAI threw everything at us for 12 days—literally. Were you overwhelmed like I was? Catch up on what you missed.

Heads up before we jump in—it's obvious OpenAI had a backlog of features they needed to ship, so I’m calling this the 12 Days of Shipmas because, let’s face it, that sounds better than 12 Days of OpenAI. To keep things simple, I’m categorizing each launch as Green, Yellow, or Red. Red means it’s a game-changing, high-impact update, while Green means... well, nothing really changed. Let’s get into it!


Day 1: ChatGPT launched a $200 subscription (Yellow)

Day 1 of Shipmas introduced ChatGPT Pro, a $200 monthly subscription tailored for power users who need OpenAI's most advanced AI capabilities. I know… $20 to $200. Seems logical… I suppose.

This plan includes unlimited access to OpenAI’s smartest model, o1, along with o1-mini, GPT-4o, Advanced Voice, and the premium o1 pro mode. The standout feature, o1 pro mode, uses significantly more compute power to think harder and produce more reliable, accurate responses, particularly in high-demand areas like data science, programming, and case law analysis.

The price jump comes from the additional compute needed to power o1 pro mode. OpenAI has focused on delivering “4/4 reliability,” which basically means getting the answer right every time. How is this measured? A model isn't judged on getting a question right once; it only counts as having “solved” a question if it gets the correct answer in four out of four attempts. So this price jump isn’t about getting answers faster, but about precision.
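To see why the stricter metric matters, here's a minimal Python sketch of the scoring idea, assuming each question is attempted four times and each attempt is simply graded right or wrong. The function names and the results below are made up for illustration; this is not OpenAI's actual evaluation code.

```python
from typing import Mapping, Sequence


def average_accuracy(results: Mapping[str, Sequence[bool]]) -> float:
    """Share of all individual attempts that were graded correct."""
    total = sum(len(attempts) for attempts in results.values())
    correct = sum(sum(attempts) for attempts in results.values())
    return correct / total


def k_of_k_reliability(results: Mapping[str, Sequence[bool]]) -> float:
    """Share of questions where every attempt was correct (e.g. 4/4)."""
    solved = sum(1 for attempts in results.values() if attempts and all(attempts))
    return solved / len(results)


# Made-up grading results: 3 questions, each attempted 4 times.
results = {
    "q1": [True, True, True, True],      # right 4 of 4 times: solved
    "q2": [True, False, True, True],     # right 3 of 4 times: NOT solved
    "q3": [False, False, False, False],  # never right
}

print(f"average accuracy: {average_accuracy(results):.2f}")  # 0.58
print(f"4/4 reliability:  {k_of_k_reliability(results):.2f}")  # 0.33
```

On the same made-up data, the model looks decent on average accuracy but much weaker under 4/4 reliability, because q2 gets no credit for being right only most of the time.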

If you're tackling the toughest problems in math, science, or coding and reliability matters to your work, Pro mode might be for you.

My Initial Thoughts: I’ve said it before, and I’ll say it again: reliability is the name of the game when it comes to AI. While I haven’t personally tried the $200 subscription (which, let’s be honest, costs more than my car insurance), I can see the justification for the cost. More compute typically means smarter, more reliable outputs. But this isn’t without its drawbacks. Increased compute comes with a hefty energy demand, and it raises a bigger concern: are we widening the AI accessibility gap? Corporations might barely notice the cost, but for the average user, it risks making AI feel like a gated luxury.

Day 2: OpenAI’s Reinforcement Fine-Tuning Research Program (Green)

Day 2 of Shipmas was boring: it introduced the expansion of OpenAI’s “Reinforcement Fine-Tuning Research Program.” The program’s goal is to help developers create expert models for complex, domain-specific tasks with minimal data. As the name suggests, the customization technique uses reinforcement learning, letting models improve their reasoning by practicing on high-quality tasks and receiving feedback on their answers. The program targets research institutes, universities, and enterprises in fields like law, healthcare, finance, and engineering, and you have to apply, so it’s not super relevant to most of us.

Day 3: Sora Was Officially Released to the Public (Red)

On Day 3, OpenAI finally publicly dropped Sora, OpenAI’s text-to-video model. The new Sora Turbo version is faster than the preview shown back in February 2024 and allows you to create 1080p videos up to 20 seconds long from text descriptions or by remixing existing assets. It also includes features like a storyboard tool for precise frame-by-frame editing, and allows you to choose between widescreen, vertical or square formats. Its UI is very similar to Midjourney, where your generation request is queued and you see the feed of what others in the community are making.

Since OpenAI held off on releasing Sora for 10 months because of safety concerns, naturally a lot of protections were put in place. All Sora videos come with metadata and visible watermarks so people can tell where they came from. Uploads featuring real people are restricted while OpenAI works on better deepfake protections, and harmful content like child exploitation material and sexual deepfakes is blocked.

Sora’s not perfect yet (it struggles with realistic movement and longer videos), but OpenAI is putting it out there now so people can try it and figure out how to use it responsibly. It’s available to ChatGPT Plus and Pro members.

My Initial Thoughts: As a startup owner, when a company announces something almost a year before making it public, it makes me feel like they were buying time to finish or refine it. Sure, they said it was about AI safety, but I don’t think adding those safeguards should’ve taken this long. That said, the videos Sora generates are seriously sharp, and I’ve already started playing around with it to create social media campaign content.

Day 4: Canvas: Your Editable Workspace (Yellow)

Day 4 brought Canvas to all users: a tool that takes collaboration with ChatGPT beyond a chat interface by letting you create and refine ideas side by side. Canvas offers a dynamic interface for writing and coding where users can directly edit, track changes, and even run scripts. Integrated with GPT-4o, it includes features like focused edits, adjusting text length, and debugging code.

This is still considered an early beta product, but it rethinks how users interact with AI by integrating it more deeply into a workspace environment.

My Initial Thoughts: About 1.5 years ago, I competed in and won an AI innovation contest that was peer-voted by a lot of Fortune 50 directors. My entry was an AI interface for dynamic, collaborative brainstorming. The concept featured a left-side “mission control” panel that mirrored changes into the workspace, something I hadn’t really seen until OpenAI launched Canvas. Turns out, I might have been onto something! That said, after trying it out, Canvas mainly just makes ChatGPT’s suggestions editable, which is nice but not exactly groundbreaking. It’s a step up, but I was hoping for something a bit more dynamic.

Day 5: Increased Apple Intelligence Integrations (Green)

Day 5 was a little slow again and featured deeper integrations with Apple Intelligence, enhancing Siri across iOS, iPadOS, and macOS devices. Available on newer hardware like the iPhone 16 series and Macs with the M1 chip or later, the update allows users to access ChatGPT features like image and document analysis directly through Siri. OpenAI’s integration works with all subscription tiers and allegedly operates within Apple’s privacy framework, giving users smarter, AI-enhanced assistance across their devices.

My Initial Thoughts: After this Day 5 announcement, I saw a flood of articles asking how OpenAI uses data from your Apple devices. They’re saying it works within Apple’s privacy framework. I don’t know if I have a reason to doubt that, but… my gut says otherwise. The more external entities that are involved, the harder it is to truly guarantee privacy.

What’s clear is that OpenAI is digging in deep with Apple, which is interesting since Microsoft has such a large stake in OpenAI. Maybe that’s a sign Apple Intelligence isn’t as strong as it seemed, which honestly makes me sad, but why else would Apple be leaning so heavily on this partnership?

Day 6: Advanced Voice with Video (Yellow)

Day 6 introduced Advanced Voice with Video, blending ChatGPT's voice features with video capabilities. Now, Plus and Pro users can have “video calls” with screen sharing directly through the mobile app, letting you show your surroundings or share your screen during conversations. OpenAI also launched a fun Santa Mode for seasonal chats. While most countries have access, users in parts of Europe will need to wait, with enterprise and education rollouts planned for January.

My Initial Thoughts: Well, this was super cool, gotta admit. But I love how OpenAI basically made it clear the EU is being punished for its regulations. It’s a very subtle way of making the EU look like the bad guy instead of a region guaranteeing its people a right to data protection.

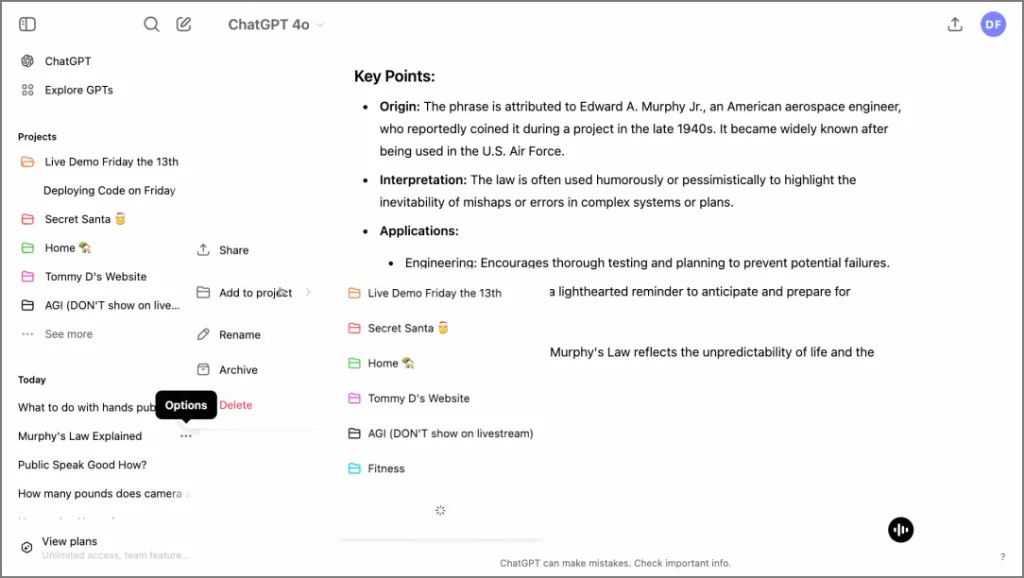

Day 7: “Projects” and Folder Organization (Red)

“Projects” launched on Day 7: a new feature that helps users organize their workflow by grouping related conversations, files, and tasks in one central location. Not much more to say than that.

My Initial Thoughts: This feels similar to Anthropic’s “Projects” feature, but honestly it’s already a game changer for me because I’m a ChatGPT user, after all. I can finally organize chats and files together.

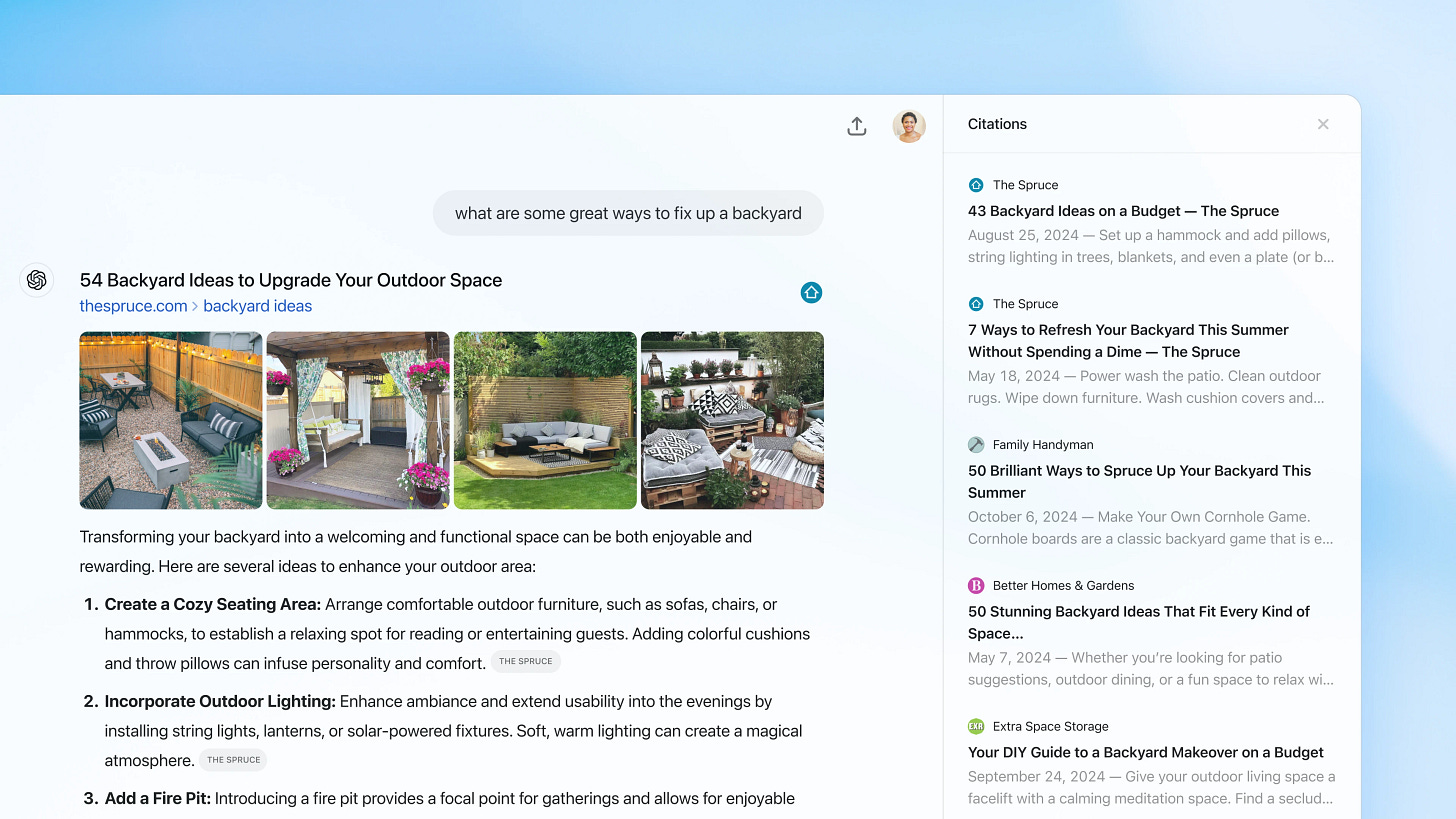

Day 8: ChatGPT Search (Red)

Day 8 brought ChatGPT search to all users, including free accounts. It lets ChatGPT pull real-time info like news, sports scores, and stock updates directly from the web. Users can manually trigger searches or let ChatGPT decide when to search, with reference links provided for verification. There’s also a map interface, and search now works in voice mode too. It can be used through ChatGPT directly or via a browser extension.

My Initial Thoughts: I say it over and over again: how we obtain information online is changing. I hate the default Google SERPs now. So, as evidence of what I’ve been trying to tell you all for a year, I’m going to end this section with a quote from OpenAI’s search announcement: “We are convinced that AI search will be, in a near future and for the next generations, a primary way to access information.”

Mic drop. Go watch my video on Generative Engine Optimization to understand the research on optimizing your content as traditional SEO gets pushed out of the game.

Day 9: The Day for Devs (Green)

Day 9 was a kind of mini Dev Day focused on developers. OpenAI released its o1 model through the API, with support for function calling, vision (image) inputs, and developer messages. GPT-4o audio pricing in the Realtime API dropped by 60%, with a new GPT-4o mini option offering even cheaper rates. OpenAI also introduced Preference Fine-Tuning for easier model customization and launched beta SDKs for Go and Java, expanding the tools for building AI solutions.

My Initial Thoughts: Cool. That’s all.

Day 10: 1-800-ChatGPT (Green)

Day 10 brought a playful twist: OpenAI launched voice and messaging access to ChatGPT via a toll-free number (1-800-CHATGPT) and WhatsApp. U.S. users get 15 free minutes of calls per month, while users worldwide can message it through WhatsApp. OpenAI pitched this as a way to make AI accessible to people without reliable internet, though it’s more of an experimental feature with limited functionality compared to the full ChatGPT service.

My Initial Thoughts: This is cool, I guess, even if it’s just a way to harvest your voiceprint for free. I won’t be using it. You can… if you want.

Day 11: Expanded Desktop Integrations (Green)

Day 11 focused on expanded desktop integrations for ChatGPT, adding support for more coding tools like JetBrains IDEs and VS Code, plus text editors. Productivity apps like Apple Notes, Notion, and Quip also joined the mix, with Advanced Voice Mode compatibility for desktop use. All of these integrations require manual setup, though, and are only available to paid subscribers.

My Initial Thoughts: Also not much to say here. I’m concerned about data privacy. What else is new?

Day 12: Preview of New o3 Models (Red)

Day 12 wrapped up Shipmas with a preview of OpenAI’s o3 and o3-mini models, marking a leap in AI reasoning. Early evaluations highlight o3’s advanced capabilities: a 2727 rating on Codeforces programming contests and a 96.7% score on AIME 2024 mathematics problems. These results reflect a focus on handling extremely complex, logic-driven tasks at a level that rivals expert human competitors.

What sets o3 apart is the use of “deliberative alignment,” a training approach that teaches models to explicitly reason through human-written safety specifications. Using chain-of-thought (CoT) reasoning, deliberative alignment lets o3 reflect on user prompts, identify the relevant policy text, and draft responses that are safer and more transparent. Unlike traditional approaches, this technique achieves precise adherence to OpenAI’s safety policies without relying on human-labeled chains of thought or answers. OpenAI believes deliberative alignment is a promising step toward combining powerful AI capabilities with robust safety measures, distinguishing o3 from predecessors like GPT-4o. OpenAI is also inviting safety and security researchers to test these models before their public release, so they get outside scrutiny before launch.

My Initial Thoughts: If you saw a flurry of articles about how OpenAI is getting really close to AGI on performance benchmarks, this was the launch they were talking about. But since it’s not publicly available yet, we can’t really comment on it. If you want to understand CoT reasoning and roughly how they’re doing this, watch my video about it on my channel.